Have you heard about the latest interview cheating method? AI-powered coding applications are now helping candidates pass technical interviews by generating working solutions on demand. While I've always disliked coding interviews, I despise dishonesty even more.

I've been rejected by numerous companies, including Meta, Uber, and many others, primarily because of coding challenges. These companies focus heavily on evaluating candidates by throwing complex problems at them that have literally nothing to do with their day-to-day work. It's a flawed system that needs rethinking.

However, I find something even more frustrating than these challenging interviews: individuals willing to compromise their integrity for an advantage. Though I believe the interview system has flaws and deserves reconsideration, cheating undermines the entire process for everyone.

The Solution: AI Detection During Interviews

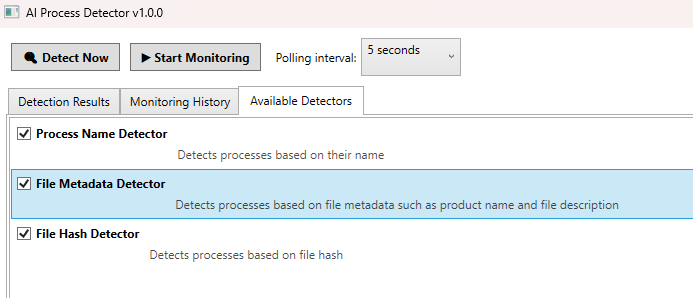

I've developed an application called "AI Process Detector" (aka "Interview Guardian") that runs during the coding portion of technical interviews. This tool actively monitors for signs of AI assistance without disrupting the candidate's legitimate work.

A simple detection mechanism, which interview platforms (Zoom, HackerRank, CodeSignal, CoderPad, Amazon Chime, and Microsoft Teams) should build in, includes:

Detection based on AI product names

File metadata detection

File hash detection
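The three checks above could be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the detector's actual implementation: the `ProcessInfo` record, the `BLOCKED_NAMES` list, the set of blocked hashes, and the `metadata` dictionary (standing in for strings such as a Windows executable's product name) are all hypothetical, and collecting that information from the operating system is left out.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set

# Hypothetical blocklist of normalised product names (illustration only).
BLOCKED_NAMES: Set[str] = {"interviewcoder"}


@dataclass
class ProcessInfo:
    pid: int
    name: str                      # process name as reported by the OS
    exe_path: Optional[str]        # path to the executable, if readable
    metadata: Dict[str, str] = field(default_factory=dict)  # hypothetical, e.g. {"ProductName": ...}


def normalise(text: str) -> str:
    """Lower-case and keep only letters and digits, so 'Interview Coder' -> 'interviewcoder'."""
    return "".join(ch for ch in text.lower() if ch.isalnum())


def sha256_of(path: str) -> Optional[str]:
    """Hash the executable on disk in chunks; return None if it cannot be read."""
    digest = hashlib.sha256()
    try:
        with open(path, "rb") as f:
            for chunk in iter(lambda: f.read(65536), b""):
                digest.update(chunk)
    except OSError:
        return None
    return digest.hexdigest()


def check_process(proc: ProcessInfo, blocked_names: Set[str],
                  blocked_hashes: Set[str]) -> List[str]:
    """Return which checks ('name', 'metadata', 'hash') flagged this process."""
    reasons: List[str] = []
    # 1. Detection based on AI product names (substring match on the process name).
    if any(b in normalise(proc.name) for b in blocked_names):
        reasons.append("name")
    # 2. File metadata detection: product/company strings attached to the binary.
    if any(b in normalise(v) for v in proc.metadata.values() for b in blocked_names):
        reasons.append("metadata")
    # 3. File hash detection against hashes of known builds.
    if proc.exe_path is not None:
        h = sha256_of(proc.exe_path)
        if h is not None and h in blocked_hashes:
            reasons.append("hash")
    return reasons
```

Each check covers a different evasion: a renamed process still matches on metadata or hash, while a rebuilt binary changes its hash but may keep its product name.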

This requires the candidate's permission to access the list of currently running processes. My AI Process Detector can poll periodically or perform ad-hoc scans to detect whether AI tools are running on the system and, if any are found, request their removal.
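A rough sketch of the difference between an ad-hoc scan and polling, assuming the candidate has already granted access to the process list. Everything here is hypothetical: `list_linux_processes` reads Linux's `/proc` filesystem only (Windows and macOS would need a different lister, for example one built on a third-party library such as psutil), and `is_ai_tool` and `on_detect` stand in for the real matching logic and the "please close this tool" prompt.

```python
import os
import time
from typing import Callable, List, Optional, Tuple

Process = Tuple[int, str]  # (pid, process name)


def list_linux_processes() -> List[Process]:
    """Enumerate processes via /proc (Linux only)."""
    procs: List[Process] = []
    for entry in os.listdir("/proc"):
        if not entry.isdigit():
            continue  # skip non-process entries such as /proc/cpuinfo
        try:
            with open(f"/proc/{entry}/comm") as f:
                procs.append((int(entry), f.read().strip()))
        except OSError:
            continue  # process exited (or is unreadable) between listdir and open
    return procs


def scan_once(list_processes: Callable[[], List[Process]],
              is_ai_tool: Callable[[str], bool]) -> List[Process]:
    """Ad-hoc scan: return every running process the predicate flags."""
    return [p for p in list_processes() if is_ai_tool(p[1])]


def poll(list_processes: Callable[[], List[Process]],
         is_ai_tool: Callable[[str], bool],
         on_detect: Callable[[List[Process]], None],
         interval_s: float = 5.0,
         max_scans: Optional[int] = None) -> None:
    """Run scan_once every interval_s seconds; max_scans=None polls until stopped."""
    scans = 0
    while max_scans is None or scans < max_scans:
        hits = scan_once(list_processes, is_ai_tool)
        if hits:
            on_detect(hits)  # hypothetical hook, e.g. ask the candidate to close the tool
        scans += 1
        if max_scans is None or scans < max_scans:
            time.sleep(interval_s)
```

Passing the lister and predicate in as functions keeps the scan logic testable with fake process lists and lets the same loop run on any OS that has a lister.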

One major red flag that would warrant immediately terminating the interview is the use of Interview Coder, because of its evasive tactics. Interview Coder has been specifically developed to evade detection and generate interview answers on the fly. In the example below, we demonstrate our ability to detect "Interview Coder" using metadata, though there are additional detection methods I've developed that won't be disclosed in this blog post.

I'm planning to offer this detection solution as a product in the near future, though I'm still finalizing the details of how it will be distributed. If you're interested in learning more when it becomes available, subscribe to my Substack and like this post for updates. I'll share more information about pricing, features, and availability soon.

So this means the interview candidate has to download and run your program? Does that not open a whole host of problems in itself?

What if your application opens the candidate's computer up to vulnerabilities?

What if the candidate only has a Linux OS?

What if the tools you're detecting change? The creator has already said he's going to randomize the process name. He will eventually randomize the other metadata too.

I think your solution just becomes a cat-and-mouse chase where the cat kindly asks the mouse to run arbitrary code so the cat can make sure the mouse doesn't cheat. The release of Interview Coder is wonderful because it breaks the old way of interviewing and forces it to keep up with emerging technology.

A better solution to this problem is heuristic-based detection with external validation. For example: give the candidate a realistic problem (drawn from the actual job without leaking confidential information), ask about previous experience in casual conversation to see whether they know what they are talking about, hold an onsite to check technical coding skills, and get references from previous managers who can vouch for them and the work they've done.

That is, at least until AGI comes out; then we're all cooked 💀.